Related Questions:

– What is Gradient Boosting (GBM)?

– What is a Random Forest?

– GBM vs Random Forest: which algorithm should be used when?

Gradient Boosting Machines and Random Forest are both popular tree-based machine learning algorithms used for supervised learning tasks such as classification and regression. However, they differ in several key ways as shown below:

| Attribute | Difference between Random Forest and Gradient Boosting |

|---|---|

| Ensemble Method | Random Forest (RF) is an ensemble method that combines multiple decision trees to make a final prediction. While Gradient Boosting (GBM) is also an ensemble method, it works differently by combining multiple weak learners, usually decision trees, through an iterative process. |

| Training process | RF builds each decision tree independently, while GBM builds decision trees in sequence, with each tree attempting to improve the performance of the previous tree. |

| Interpretability | RF can provide a measure of feature importance that reflects the contribution of each feature to the overall model performance. This measure can be used to identify the most important features for the target variable and to interpret the model's predictions. GBM can also provide a measure of feature importance, but the interpretation of GBM is more complex because it involves the combination of multiple weak learners through an iterative boosting process. |

| Overfitting | RF is less prone to overfitting than GBM, because it builds multiple trees and averages their predictions, which reduces the impact of individual trees that may overfit the data. GBM, on the other hand, can overfit the data if the number of trees or iterations is too high. Overfitting can be controlled in GBM by carefully tuning hyperparameters such as learning rate and maximum depth together. XGBoost also introduces additional regularization to further prevent overfitting to the training data. A well tuned GBM algorithm has very high accuracy and is often difficult to beat in terms of predictive performance on structured data sets. |

| Computation power | In Random Forest, multiple decision trees can be built simultaneously. The parallel operation leads to low computation time. However, in a traditional GBM algorithm, trees are built sequentially making it computationally less efficient. Alternative implementations of GBM such as LightGBM and XGBoost parallelize several operations leading to faster training process |

As shown in the above table, both GBM and Random Forest are ensemble methods, however, they differ in their training process, computational resources, model results, and interpretability. The choice between GBM and Random Forest depends on the specific characteristics of the dataset, the modeling objectives, model performance, and the available computing resources. It is usually advisable to try as many algorithms as possible and even consider an ensemble of multiple algorithms before making the final decision.

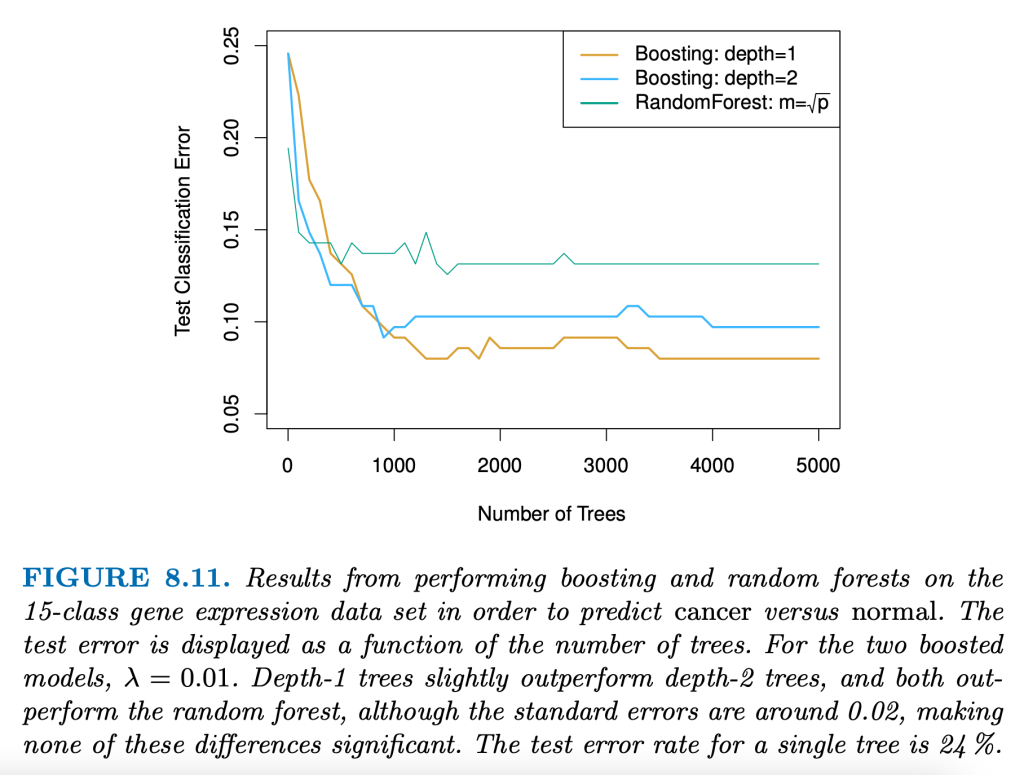

Illustrative example: Comparing performance of Gradient Boosting and Random Forest for a Cancer study

The following graph taken from the book ‘An Introduction to Statistical Learning’ is an illustrative example of performance of Boosting algorithms vs Random Forest trained on Gene expression data to predict cancer (binary classification problem) (lower classification error is better).

Note: This example is for illustration purposes only. The performance of different models can vary based on training data and modelling process

Video Explanation

- In the following video, Josh Stramer takes viewers on a StatQuest that motivates Boosting, and compares and contrasts it with Random Forest. Even though the video is titled “Adaboost”, it does explain the differences between Random Forest and Boosting.