Related articles:

– What is a Multilayer Perceptron (MLP) or a Feedforward Neural Network (FNN)?

– Explain the basic architecture of a Neural Network, model training and key hyper-parameters

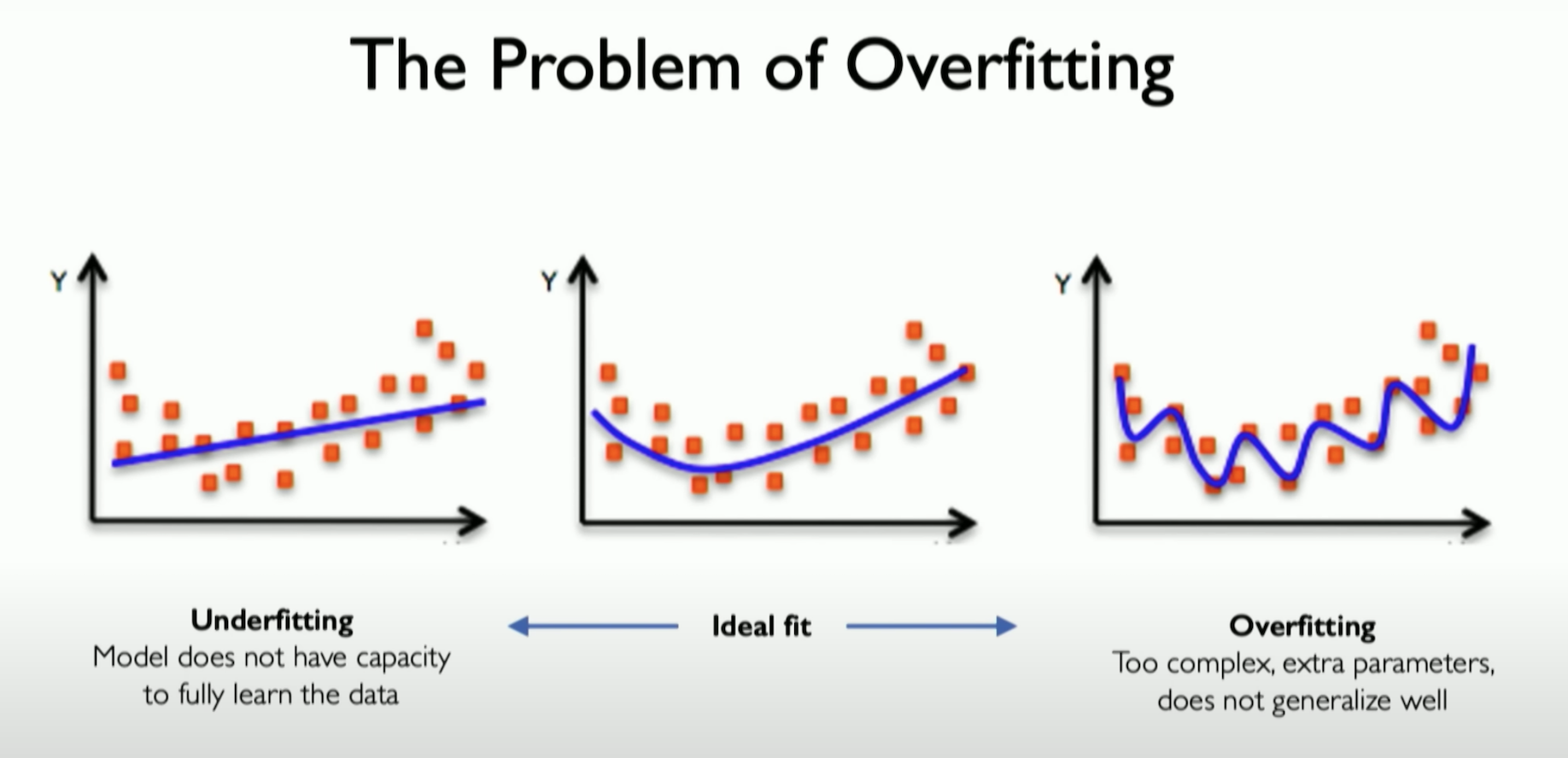

Title: Depicting Underfitting, Ideal fit, and Overfitting in Neural Network training

Source: MIT Deep Learning Course

Overfitting occurs when a machine learning model is complex enough to fit the training data too closely. The model then learns the noise and random fluctuations in the training data instead of the underlying patterns and relationships that are relevant to the problem being solved. As a result, it may perform very well on the training data but poorly on new, unseen data. Neural network models are highly susceptible to overfitting.

How to mitigate overfitting in neural networks?

One of the main drawbacks of deep learning is that it is more prone to overfitting than more traditional machine learning models. However, several techniques can be used to mitigate that risk.

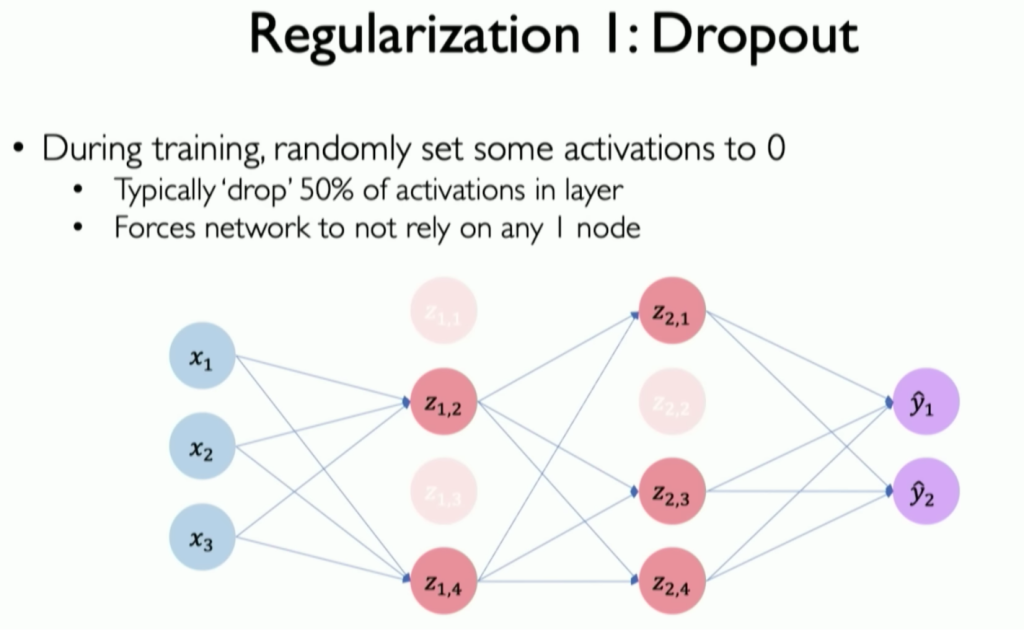

- Use Dropout

Dropout refers to randomly turning off a fraction of neurons during each training iteration, which helps prevent any single neuron from becoming overly specialized. Each node within the hidden layers has some probability of being turned off, so over many training iterations the data is fed through different, simpler sub-networks, which results in lower variance than if the same, more complex model were used in every pass. In this way, dropout approximates the variance reduction of training an ensemble of networks, without the cost of training each one separately.

Title: Dropout in neural network

Source: MIT Deep Learning Course
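The dropout mechanism described above can be sketched in plain Python. This is a minimal illustration, assuming the common "inverted dropout" variant, in which surviving activations are scaled up during training so that nothing needs to change at inference time. The function name `dropout` and its parameters are hypothetical, not taken from any particular library.

```python
import random

def dropout(activations, drop_prob, training=True, rng=None):
    """Inverted dropout over a list of activations (illustrative sketch)."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    # At inference time (or with drop_prob == 0) every unit is kept unchanged.
    if not training or drop_prob == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep_prob = 1.0 - drop_prob
    # Each unit is zeroed with probability drop_prob; survivors are scaled by
    # 1 / keep_prob so the expected activation stays the same.
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]

hidden = [0.5, 1.0, 1.5, 2.0]
print(dropout(hidden, drop_prob=0.5, rng=random.Random(0)))  # random mask
print(dropout(hidden, drop_prob=0.5, training=False))        # unchanged
```

Each training pass draws a fresh mask, so the network effectively trains a different thinned sub-network on every iteration.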

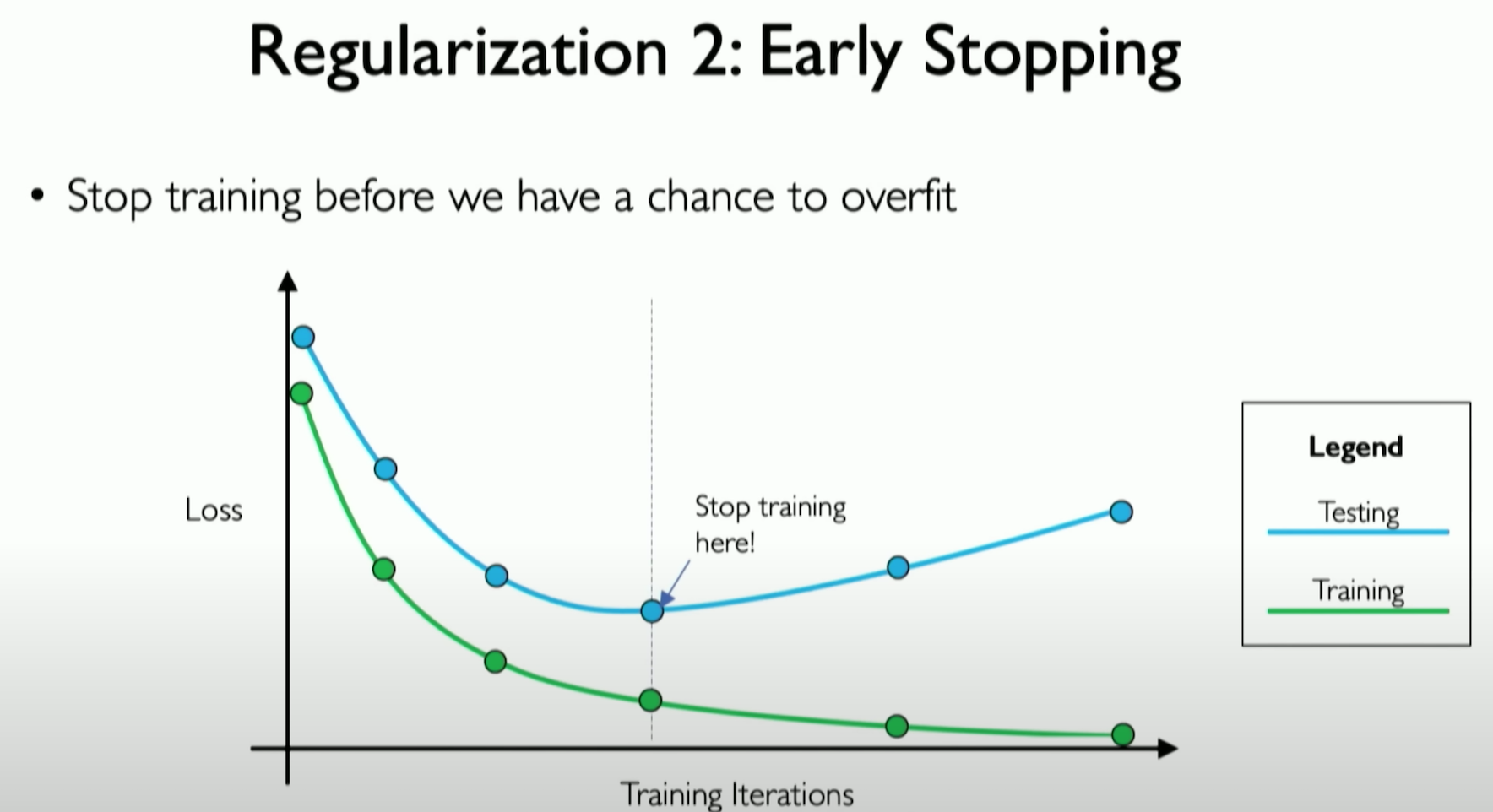

- Implement Early Stopping

Early stopping terminates training when, after a certain number of iterations, the loss has stopped decreasing by more than a small threshold. In practice the loss is usually monitored on a held-out validation set, so training halts once the model stops improving on data it has not been trained on. With early stopping, the maximum number of iterations can be set to a large value: assuming the loss bottoms out before the final iteration, training ends at that point rather than running to completion. This also conserves computing resources.

Title: Early stopping to prevent Overfitting

Source: MIT Deep Learning Course
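The stopping rule can be illustrated with a small helper that scans a sequence of per-epoch losses. This is a sketch under stated assumptions: the names `patience` (how many non-improving epochs to tolerate) and `min_delta` (the "small threshold") follow common usage but are chosen here for illustration, and the loss values are made up.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=1e-3):
    """Return the 0-based epoch at which training would stop, or None."""
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if best - loss > min_delta:
            # The loss improved by more than the threshold: reset the counter.
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None  # the rule never triggered within the given epochs

# Hypothetical validation losses: improvement stalls after epoch 3.
losses = [1.00, 0.80, 0.65, 0.60, 0.61, 0.60, 0.62, 0.63]
print(early_stopping_epoch(losses, patience=3))  # -> 6
```

A real training loop would typically also keep a copy of the weights from the best epoch and restore them when stopping.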

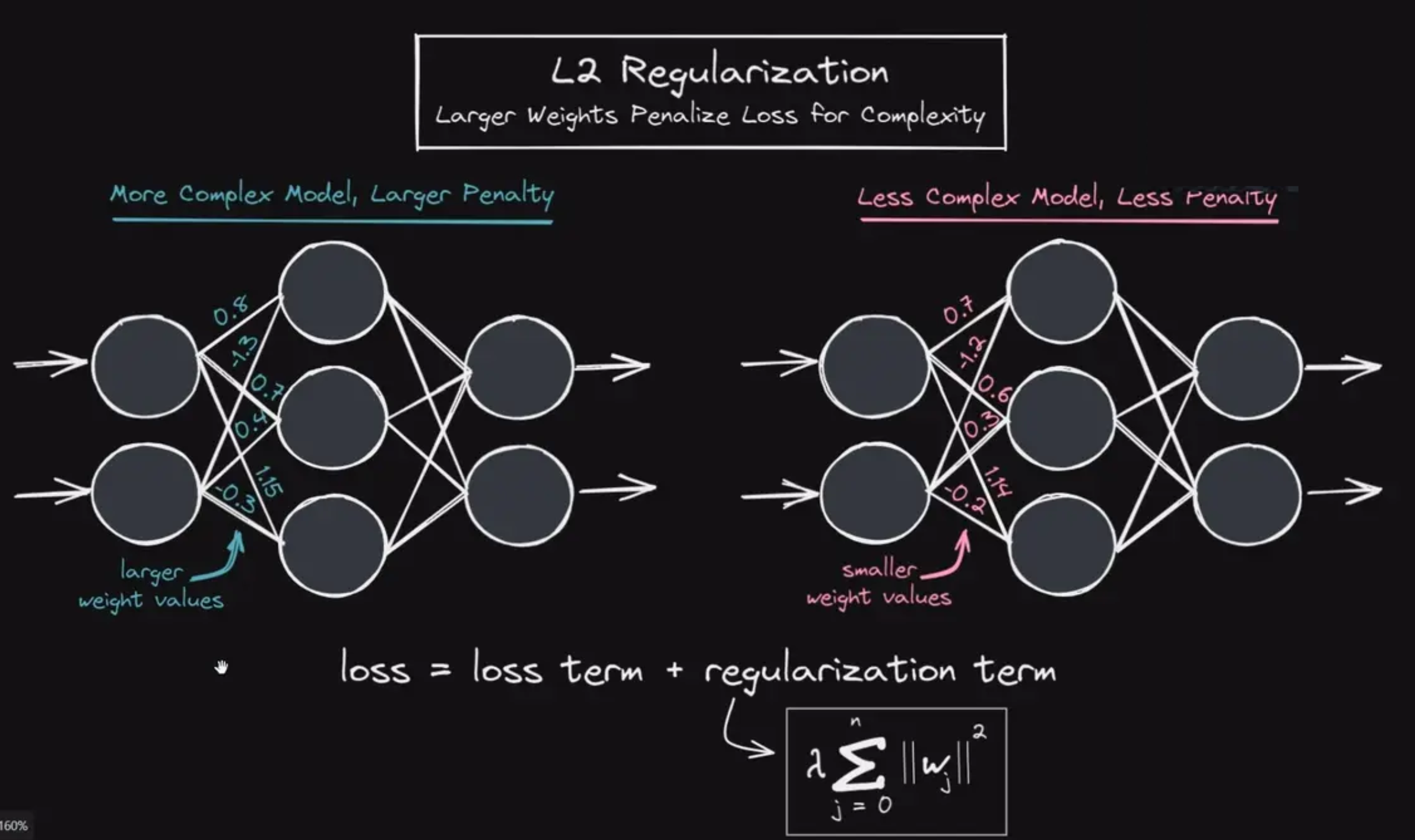

- Use L2 Regularization

The most common form of regularization used in deep learning is L2 regularization, which adds a penalty proportional to the sum of the squared weights to the loss function, shrinking the magnitude of the weights. This discourages the model from assigning too much importance to any single feature, reducing model complexity.

Source: Deep Lizard
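To make the penalty term concrete, the sketch below computes it for a flat list of weights and applies one gradient-descent step that includes it. It assumes the penalty is written as `lam * sum(w**2)`; some texts use `lam / 2` instead, which only rescales the coefficient. The function names, the weights, and the `data_loss` value are all hypothetical.

```python
def l2_penalty(weights, lam):
    """L2 penalty: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)

def sgd_step_with_l2(weights, grads, lr, lam):
    """One gradient step on (data loss + L2 penalty).

    The gradient of lam * w**2 with respect to w is 2 * lam * w, so each
    weight is pulled toward zero in proportion to its own size.
    """
    return [w - lr * (g + 2.0 * lam * w) for w, g in zip(weights, grads)]

weights = [3.0, -2.0, 0.5]
data_loss = 0.40  # hypothetical loss on the training batch
total_loss = data_loss + l2_penalty(weights, lam=0.01)
print(total_loss)

# With zero data gradients, the step only shrinks the weights.
print(sgd_step_with_l2(weights, grads=[0.0, 0.0, 0.0], lr=0.1, lam=0.01))
```

Larger weights receive a larger pull toward zero, which is why the penalty discourages any single weight from dominating.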

- Simpler Model Architecture

Reduce the complexity of the neural network by using fewer layers and neurons, which can make it less prone to overfitting.

- More Training Data

Increasing the size of the training dataset can help neural networks generalize better and reduce overfitting, as the model has more diverse examples to learn from.

- Gradient Clipping

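Limit the magnitude of gradients during training to prevent exploding gradients. Clipping is primarily a training-stability technique; any benefit for overfitting is indirect, since unstable updates can push the weights to extreme values.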
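One common way to limit gradient magnitude is to rescale the whole gradient vector when its norm exceeds a cap. The sketch below assumes this "clip by global norm" variant, with the gradients flattened into a single list; the function name and the `max_norm` parameter are illustrative.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale grads so their Euclidean norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    # Gradients already within the cap are returned unchanged.
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    # Scaling every component by the same factor preserves the direction.
    return [g * scale for g in grads]

print(clip_by_global_norm([3.0, 4.0], max_norm=1.0))  # norm 5.0, rescaled
print(clip_by_global_norm([0.3, 0.4], max_norm=1.0))  # norm 0.5, unchanged
```

Because every component is scaled by the same factor, the update keeps its direction and only its step size is capped.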

- Data Augmentation

Increase the diversity of the training data by applying transformations like rotation, scaling, or cropping, which can help the model generalize better.
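As a rough illustration of such transformations, the sketch below applies a random horizontal flip and a random crop to an image stored as a nested list of pixel values. The helper names and the toy 4x4 image are made up for this example; a real pipeline would normally rely on an image library instead.

```python
import random

def horizontal_flip(image):
    """Mirror each row of a 2D image (list of rows)."""
    return [row[::-1] for row in image]

def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window at a random position."""
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]

def augment(image, rng, flip_prob=0.5):
    """Randomly flip, then crop one row and one column off the image.

    Assumes the image is at least 2x2 so the crop is non-empty.
    """
    if rng.random() < flip_prob:
        image = horizontal_flip(image)
    return random_crop(image, len(image) - 1, len(image[0]) - 1, rng)

image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
rng = random.Random(0)
for _ in range(3):
    print(augment(image, rng))  # a different variant on each call
```

Each epoch then sees slightly different versions of the same underlying examples, while the labels stay the same.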

- Batch Normalization

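Incorporate batch normalization layers, which normalize each layer's inputs over a mini-batch. This stabilizes training, and the noise in the mini-batch statistics can act as a mild regularizer that improves generalization.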
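The core computation of a batch normalization layer in training mode can be sketched for a single feature across one mini-batch. The names `gamma`, `beta`, and `eps` follow common convention for the learnable scale, the learnable shift, and the numerical-stability constant. The running statistics used at inference time are omitted here for brevity.

```python
import math

def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature over a mini-batch, then scale and shift."""
    n = len(values)
    mean = sum(values) / n
    # Biased (population) variance over the mini-batch.
    var = sum((v - mean) ** 2 for v in values) / n
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]

batch = [1.0, 2.0, 3.0, 4.0]  # hypothetical activations of one feature
print(batch_norm(batch))
```

With the default `gamma` and `beta`, the output has approximately zero mean and unit variance regardless of the scale of the inputs.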

- Cross-Validation

Instead of splitting the dataset into just a training set and a test set, split it into multiple folds. Train the model on different combinations of these folds and validate on the remaining ones. This gives a more reliable estimate of generalization and helps reveal when a model has overfit to a particular subset of the data.
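The fold-splitting procedure can be sketched as a generator of index lists. This is a minimal version that assumes the data has already been shuffled, since it assigns contiguous index ranges to folds. The function name `k_fold_indices` is hypothetical.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, val_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size

for train_idx, val_idx in k_fold_indices(n_samples=10, k=3):
    print("train:", train_idx, "validate:", val_idx)
```

Every sample is used for validation exactly once, and the k validation scores are usually averaged to estimate how well the model generalizes.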

Video Explanation

- “Regularization in a Neural Network” by Assembly AI succinctly explains the problem of overfitting in neural networks and covers the concepts of regularization (L1 & L2), dropout, and early stopping for tackling overfitting (Runtime: ~12 mins)

- “Overfitting in a Neural Network explained” by Deep Lizard covers the problem of overfitting and use of Dropout and Data Augmentation techniques to prevent overfitting in neural network training (Runtime: 5 mins)