
Overview of Transformers

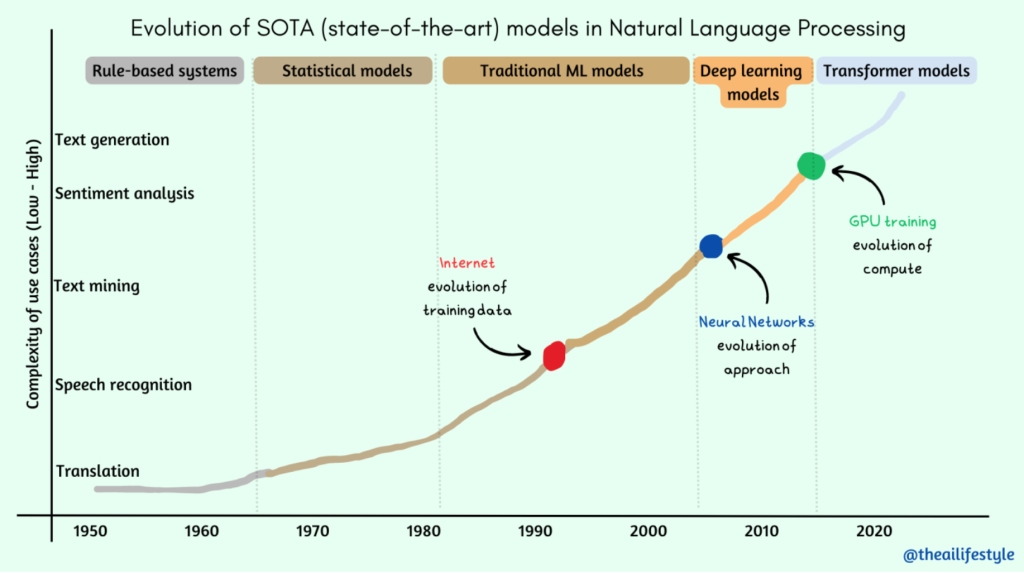

Transformers are a type of deep learning architecture designed to process sequential data, such as text or time series, by capturing contextual relationships and dependencies between the elements of a sequence. They have been particularly successful in natural language processing (NLP), both in sequence-to-sequence tasks such as language translation, text generation and text summarization, and in tasks such as sentiment analysis.

The Transformer architecture was first introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al. It departed from the architectures commonly used for sequence tasks at the time, such as recurrent neural networks (RNNs), Long Short-Term Memory networks (LSTMs) and convolutional neural networks (CNNs), by relying on attention mechanisms instead of recurrence or convolution.

Source: The AI Lifestyle

Advantages of Transformer models

Below are some of the key reasons why Transformers outperformed their predecessors and became the foundational models behind significant advances in natural language processing (NLP):

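- Parallelization: Self-attention processes all positions of a sequence at once, so computation can be parallelized across the sequence. This makes training much faster than with sequential models such as RNNs and LSTMs, which must process tokens one step at a time.

- Long-Range Dependencies: Because every position can attend directly to every other position, Transformers capture long-range dependencies far better than RNNs, where information has to pass through many intermediate steps.

- Scalability: Transformers scale well to very large datasets, large model sizes and complex tasks.

- Transfer Learning: Pretrained Transformer models can be fine-tuned for specific tasks, leveraging the knowledge learned during extensive pretraining.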

Source: The AI LifeStyle
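Both the parallelization and the long-range-dependency advantages come from self-attention. Below is a minimal single-head sketch of the scaled dot-product attention described by Vaswani et al., written in NumPy. Random matrices stand in for learned weights, and the function names and sizes are illustrative assumptions rather than any library's API.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row maximum for numerical stability before exponentiating
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention (illustrative sketch).

    X:             (seq_len, d_model) token embeddings
    W_q, W_k, W_v: (d_model, d_k) projections (random here, learned in practice)
    Returns (output, weights) with shapes (seq_len, d_k) and (seq_len, seq_len).
    """
    Q = X @ W_q
    K = X @ W_k
    V = X @ W_v
    d_k = Q.shape[-1]
    # One matrix product scores every token against every other token:
    # there is no step-by-step recurrence, and the first token can attend
    # to the last one directly, however far apart they are.
    scores = Q @ K.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_k = 6, 8, 4
X = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(X, W_q, W_k, W_v)
print(out.shape)      # (6, 4)
print(weights.shape)  # (6, 6)
```

Because the attention scores for all token pairs come from a single matrix product, the whole sequence is handled at once on parallel hardware, whereas an RNN has to update its hidden state token by token.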

Though the original paper focused on language translation, several influential models soon adapted the architecture to other kinds of NLP tasks. In 2018, OpenAI introduced GPT (the model family that later powered ChatGPT) and Google developed BERT (later used in Google Search). These were followed by a wave of Transformer-based models (RoBERTa, DALL-E, LLaMA, PaLM, T5, GPT-4, and more) with applications in text-to-text, text-to-image, text-to-video and text-to-speech generation.

Their ability to capture complex relationships, handle long-range dependencies, and facilitate transfer learning has made them a cornerstone of modern AI research and applications.

Major breakthroughs in Transformer Models

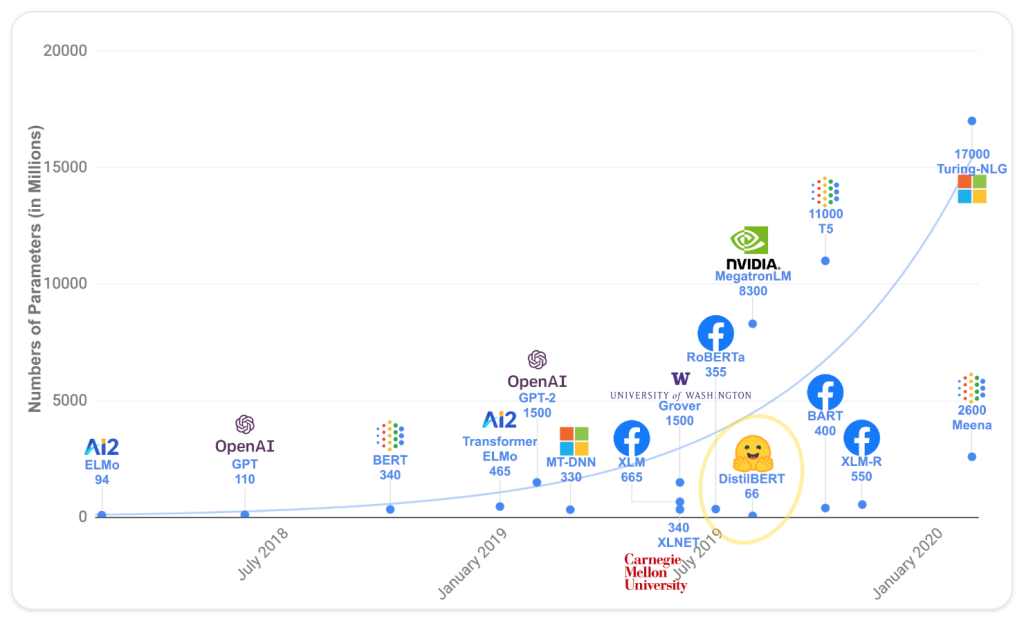

Transformer models, which have become nearly synonymous with language models, are much larger than traditional machine learning models. Researchers have found that increasing model size and the volume of pre-training data leads to better performance: smaller models tend to underfit the data, so performance improves as model size grows.

The graph illustrates how the size of Transformer models has been pushed ever higher in pursuit of better performance. The largest model it shows is the Transformer-based Megatron-Turing Natural Language Generation model (MT-NLG), with 530 billion parameters.

Source: HuggingFace
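To get a feel for where such parameter counts come from, here is a back-of-the-envelope estimate. It assumes the standard Transformer block (query, key, value and output projections in attention, plus a feed-forward layer four times wider than the model dimension) and ignores embeddings, biases and layer norms, which gives roughly 12 · n_layers · d_model² parameters. The helper function is hypothetical, and the configurations used (12 layers × 768 for BERT-base, 105 layers × 20480 for MT-NLG) are the commonly reported ones.

```python
def approx_transformer_params(n_layers: int, d_model: int, ffn_mult: int = 4) -> int:
    """Rough parameter count for a stack of standard Transformer blocks.

    Counts only the weight matrices (no biases, LayerNorm or embeddings):
      attention:    Q, K, V and output projections   -> 4 * d_model^2
      feed-forward: d_model -> ffn_mult * d_model -> d_model
                                                      -> 2 * ffn_mult * d_model^2
    """
    attention = 4 * d_model ** 2
    feed_forward = 2 * ffn_mult * d_model ** 2
    return n_layers * (attention + feed_forward)


# Assumed configurations: (number of layers, hidden size)
print(f"BERT-base-sized stack: {approx_transformer_params(12, 768) / 1e6:.1f}M")   # 84.9M
print(f"MT-NLG-sized stack: {approx_transformer_params(105, 20480) / 1e9:.1f}B")  # 528.5B
```

Since the count grows with the square of the hidden size, doubling the width roughly quadruples the parameters per layer. The MT-NLG estimate lands close to its stated 530 billion; most of the gap between the BERT-base estimate and its commonly cited ~110M total is the embedding parameters this sketch leaves out.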

Video Explanation

- In this video by Google Cloud Tech, Dale Markowitz gives a simple, easy-to-understand overview of Transformer models. She covers what Transformers are, how they work, and why they are so impactful, compares them with RNNs, and discusses the latest machine learning models built on the Transformer architecture. (Runtime: 9 mins)