
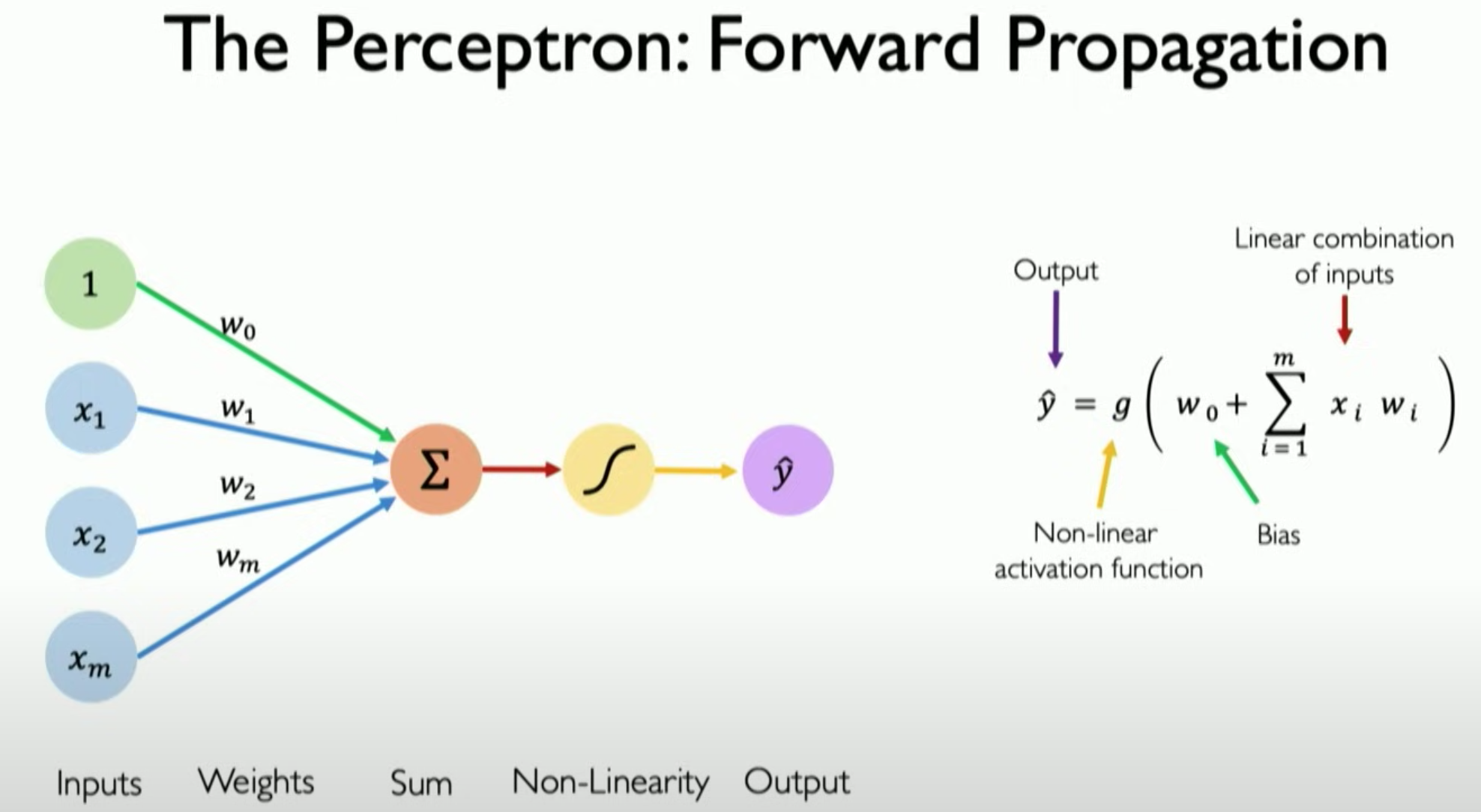

A ‘Perceptron’ is the basic building block, or single node, of a neural network, inspired by the neurons found in the brain. It takes a set of inputs, computes their weighted sum, adds a bias term, and applies an activation function to the result to produce an output. A perceptron works as follows:

- A vector of inputs x1, …, xm is passed to the perceptron

- Weights w1, …, wm are applied to the corresponding elements of the input vector, and a bias term, represented by w0, is passed along with them

- The weighted inputs and the bias term are summed

- This sum is passed to an activation function, g

- The activation function returns an output, on which the classification decision is based

Source: MIT Deep Learning Course
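The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the MIT course's code: the function names, the choice of sigmoid for g, the 0.5 decision threshold, and the example numbers are all assumptions made for this sketch.

```python
import math


def sigmoid(z):
    """Logistic activation: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def perceptron_output(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of the inputs plus the bias (w0), passed through g."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = bias + sum(w * x for w, x in zip(weights, inputs))
    return activation(z)


def classify(inputs, weights, bias, threshold=0.5):
    """Turn the activation output into a 0/1 class decision (assumed threshold)."""
    return 1 if perceptron_output(inputs, weights, bias) >= threshold else 0


# Illustrative values only.
x = [1.0, 2.0]        # inputs x1, x2
w = [0.5, -0.25]      # weights w1, w2
w0 = 0.1              # bias

output = perceptron_output(x, w, w0)   # z = 0.1 + 0.5*1.0 - 0.25*2.0 = 0.1
label = classify(x, w, w0)
```

Here the weighted inputs cancel out, so the pre-activation value equals the bias alone and the sigmoid output lands slightly above 0.5.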

In practice, single perceptrons are often used as building blocks for more complex models, such as multi-layer perceptrons (MLPs), which can handle a wider range of problems. On its own, the basic Perceptron model can only solve classification problems in which the classes are linearly separable.
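To make the linear-separability limit concrete, the sketch below searches a small grid of weights for a single step-activation perceptron that reproduces the AND and XOR truth tables. The two logic functions, the step activation, the grid range, and the helper names are assumptions chosen for illustration. The empty result for XOR on this grid is only a demonstration, although it agrees with the known fact that no single perceptron can represent XOR.

```python
from itertools import product


def step_perceptron(x1, x2, w0, w1, w2):
    """Perceptron with a step activation: 1 when w0 + w1*x1 + w2*x2 > 0."""
    return 1 if w0 + w1 * x1 + w2 * x2 > 0 else 0


def find_weights(truth_table, grid):
    """Return the first (w0, w1, w2) on the grid matching truth_table, else None."""
    for w0, w1, w2 in product(grid, repeat=3):
        if all(step_perceptron(x1, x2, w0, w1, w2) == y
               for (x1, x2), y in truth_table.items()):
            return (w0, w1, w2)
    return None


# AND is linearly separable; XOR is not.
AND = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

grid = [i / 2 for i in range(-8, 9)]   # -4.0, -3.5, ..., 4.0

and_weights = find_weights(AND, grid)  # some valid triple is found
xor_weights = find_weights(XOR, grid)  # no triple on the grid works
```

A multi-layer perceptron gets around this by combining several such units, which is why single perceptrons are mostly used as building blocks.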

Why do we add bias?

There are three main reasons:

- Assists in achieving a better data fit and learning complex patterns

The bias lets the perceptron adjust its output independently of the inputs. It plays the same role as the intercept in a linear equation: without it, the fitted line or decision boundary is forced through the origin, whereas with it the model can shift to wherever the data actually lies. This extra degree of freedom allows the network to learn and represent more complex relationships between the input and output variables.

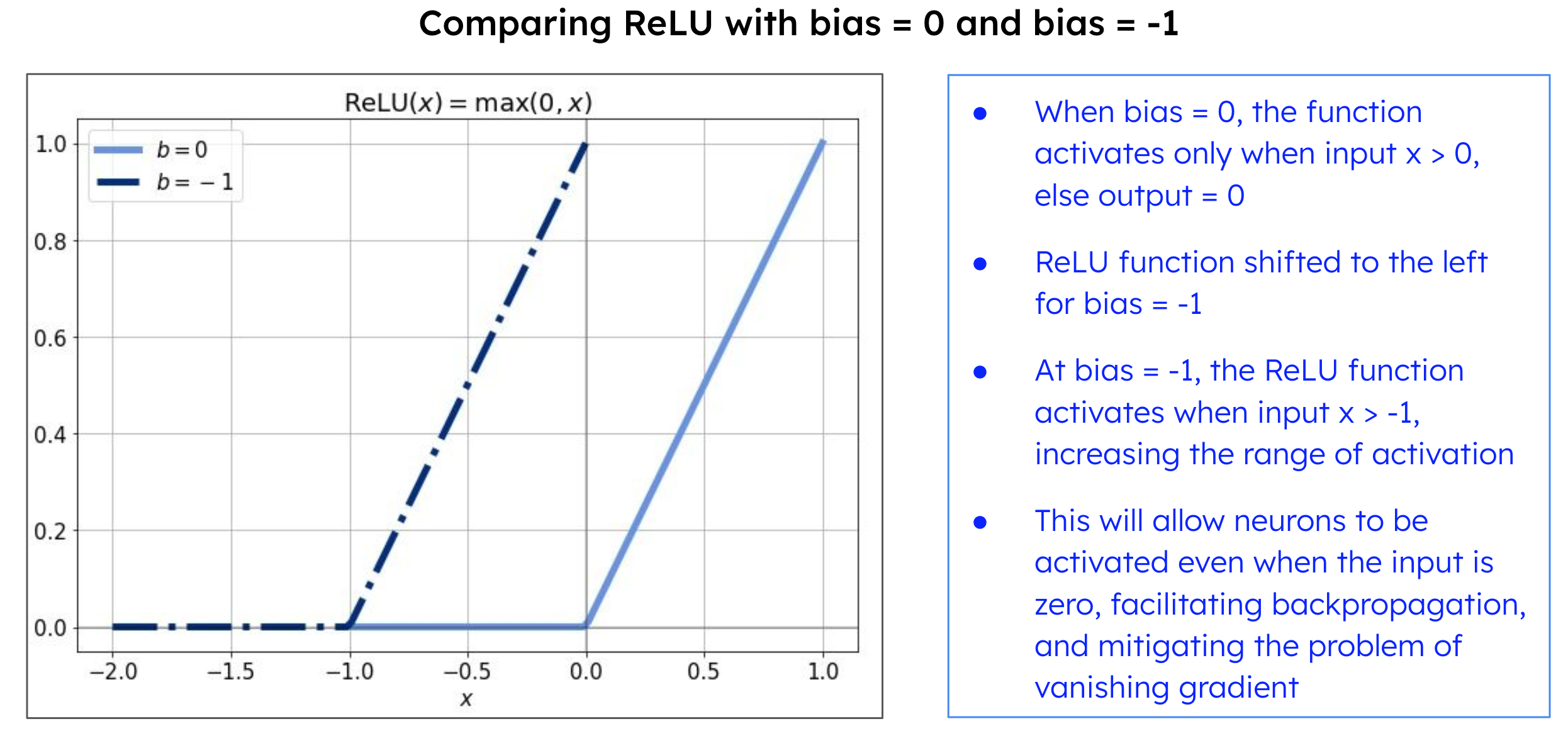
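The intercept analogy can be checked with a small least-squares fit. The sketch below uses plain linear regression rather than a perceptron, and the data (points on the line y = 2x + 3) and function names are made up for illustration. It compares a line forced through the origin with a line that has a bias term.

```python
def fit_without_bias(xs, ys):
    """Least-squares slope for y = w*x (line forced through the origin)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def fit_with_bias(xs, ys):
    """Least-squares slope and intercept for y = w*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    w = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
         / sum((x - mean_x) ** 2 for x in xs))
    b = mean_y - w * mean_x
    return w, b


def mse(xs, ys, w, b=0.0):
    """Mean squared error of the line y = w*x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Illustrative data: points on y = 2x + 3, which does not pass through the origin.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 3.0 for x in xs]

w_only = fit_without_bias(xs, ys)
w, b = fit_with_bias(xs, ys)

error_without_bias = mse(xs, ys, w_only)   # clearly above zero
error_with_bias = mse(xs, ys, w, b)        # essentially zero
```

Without the bias, the best slope has to compromise between the points, so the error stays well above zero. With the bias, the fit recovers the offset and the error vanishes.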

- Handles zero inputs and mitigates the vanishing gradient problem

Activation functions such as sigmoid and ReLU introduce non-linearity into the model. The bias term shifts the input of the activation function toward the positive or negative side. With a bias term, the model can fit data whose decision boundary does not pass through the origin, and a neuron can still activate when all of its input values are zero.

Source: Baeldung, annotated by AIML.com Research for better representation
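The zero-input case is easy to verify numerically. In this sketch (the helper names and the weight and bias values are assumptions for illustration), a neuron fed an all-zero input produces the same output whatever its weights are, unless a bias is present.

```python
import math


def sigmoid(z):
    """Logistic activation: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Rectified linear unit: passes positive values, clips the rest to 0."""
    return max(0.0, z)


def neuron(inputs, weights, bias, activation):
    """Single neuron: activation applied to bias + weighted sum of inputs."""
    return activation(bias + sum(w * x for w, x in zip(weights, inputs)))


zeros = [0.0, 0.0, 0.0]
weights = [0.8, -1.2, 0.5]   # arbitrary; they have no effect on zero inputs

# Without a bias the output is fixed, no matter what the weights are.
sigmoid_no_bias = neuron(zeros, weights, 0.0, sigmoid)   # always sigmoid(0)
relu_no_bias = neuron(zeros, weights, 0.0, relu)         # always 0, never activates

# With a bias the neuron can respond even to an all-zero input.
sigmoid_with_bias = neuron(zeros, weights, 2.0, sigmoid)
relu_with_bias = neuron(zeros, weights, 2.0, relu)
```

With all inputs at zero, the weighted sum vanishes, so the bias is the only parameter that can move the activation away from its value at zero.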

- Prevents underfitting and overfitting. Improves generalization

Bias helps strike a balance between underfitting and overfitting in neural networks. It allows the network to learn more complex patterns in the training data, which can increase accuracy (reducing underfitting), and to generalize better to unseen data (reducing overfitting).

Video Explanation

- In the introductory lecture “MIT Introduction to Deep Learning” from MIT’s Deep Learning course, Prof. Alexander Amini explains the concept of the Perceptron (skip to 14 min 30 sec in the video for the Perceptron section)