Related Questions:

– Explain the basic architecture and training process of a Neural Network model

– What are the advantages and disadvantages of Deep Learning?

– Explain the Transformer Architecture

Source: AIML.com Research

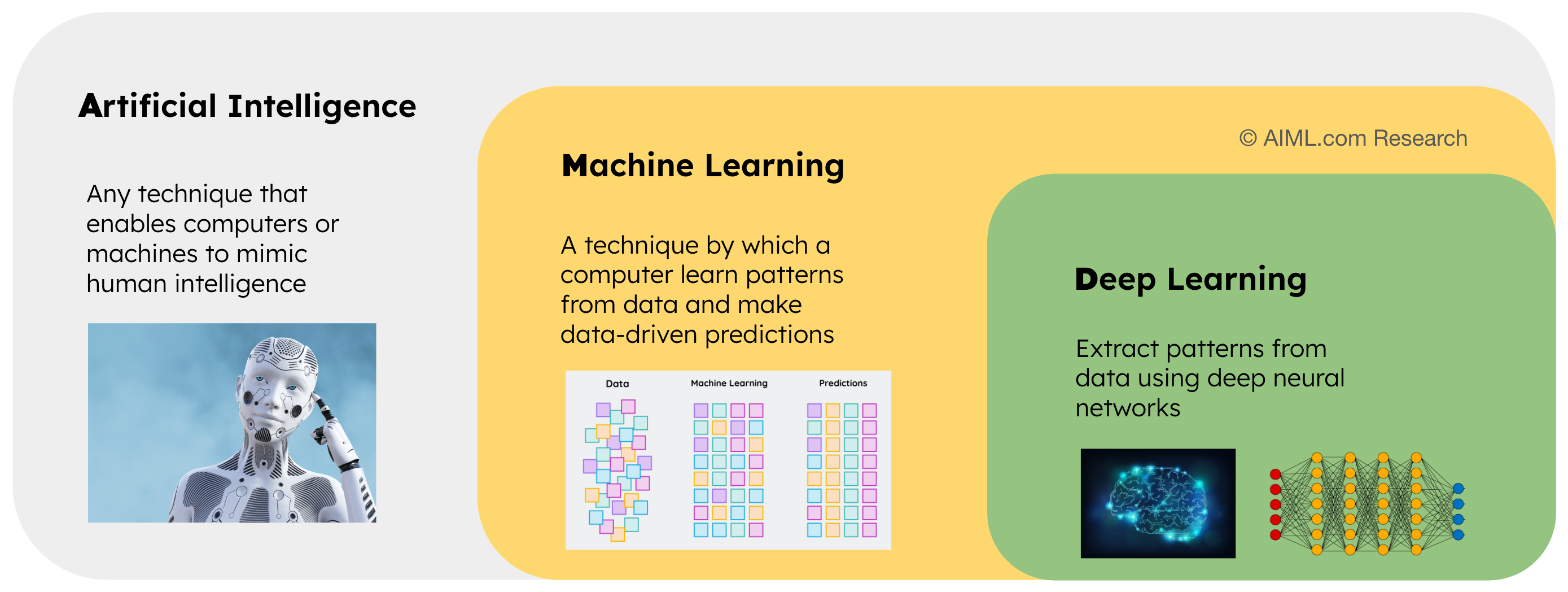

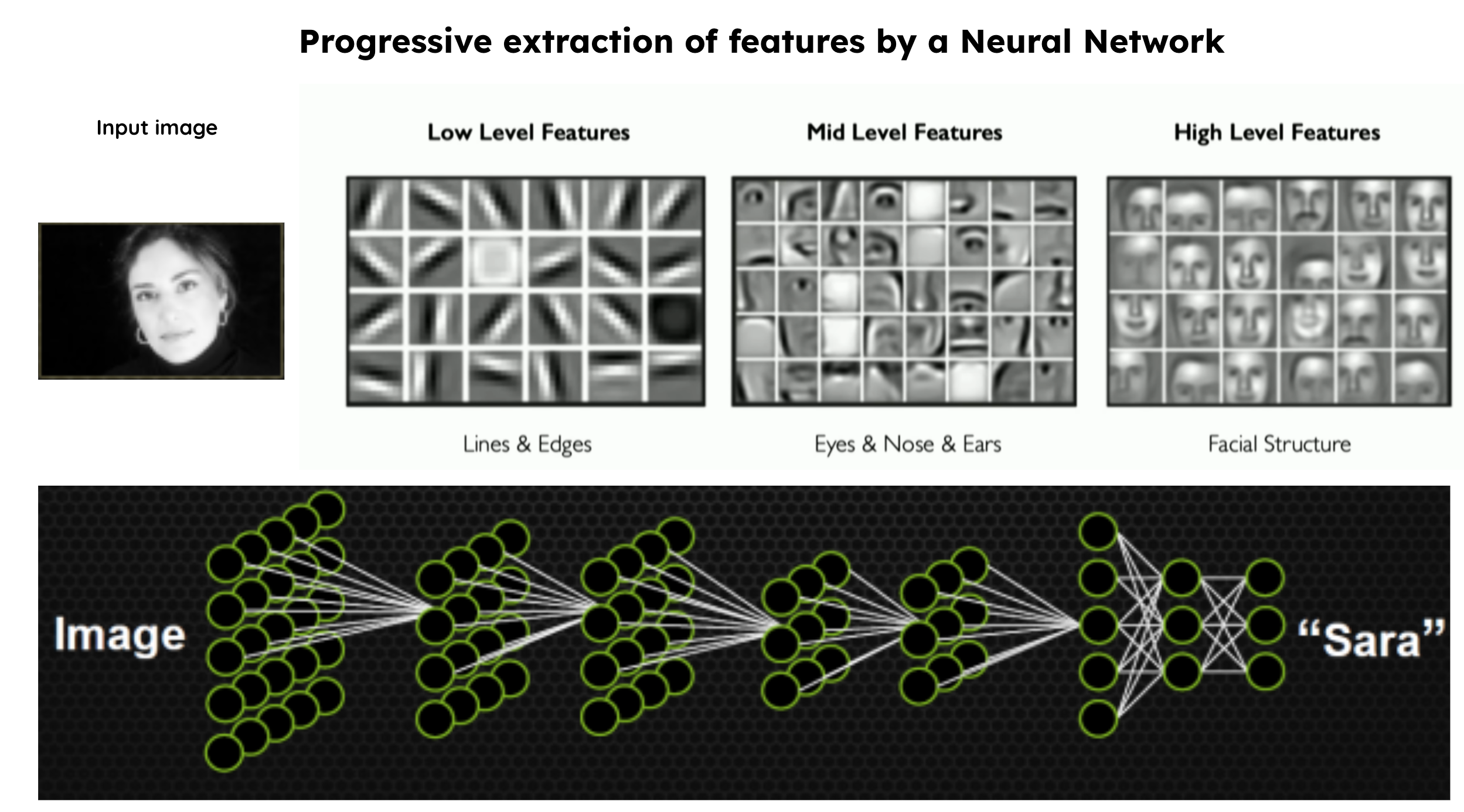

Deep Learning is a subset of machine learning that is characterized by the use of deep neural networks, with multiple layers (hence the term “deep” learning) to perform tasks that typically require human intelligence. It is inspired by the structure and function of the human brain, where each layer of neurons processes and transforms the input data to progressively extract higher-level features.

Deep neural networks (DNNs) consist of interconnected layers of artificial neurons called nodes. Each node receives input from the previous layer, applies a mathematical transformation to it, and passes the transformed output to the next layer. The layers closer to the input learn low-level features, while the deeper layers learn more abstract and complex representations.

Source: Nvidia website and MIT Deep Learning Course. Collated by AIML.com for better representation

This phenomenon of automatically learning meaningful and informative features (or representations) from raw data is also referred to as representation learning, which stands as one of the key strengths of DNNs.

Key characteristics and working of Deep Neural Network

Deep learning works by using artificial neural networks, which are composed of layers of interconnected nodes (neurons) that process and transform the data. The weights on the connections between nodes are learned through neural network training.

Key characteristics and working of deep learning include the following:

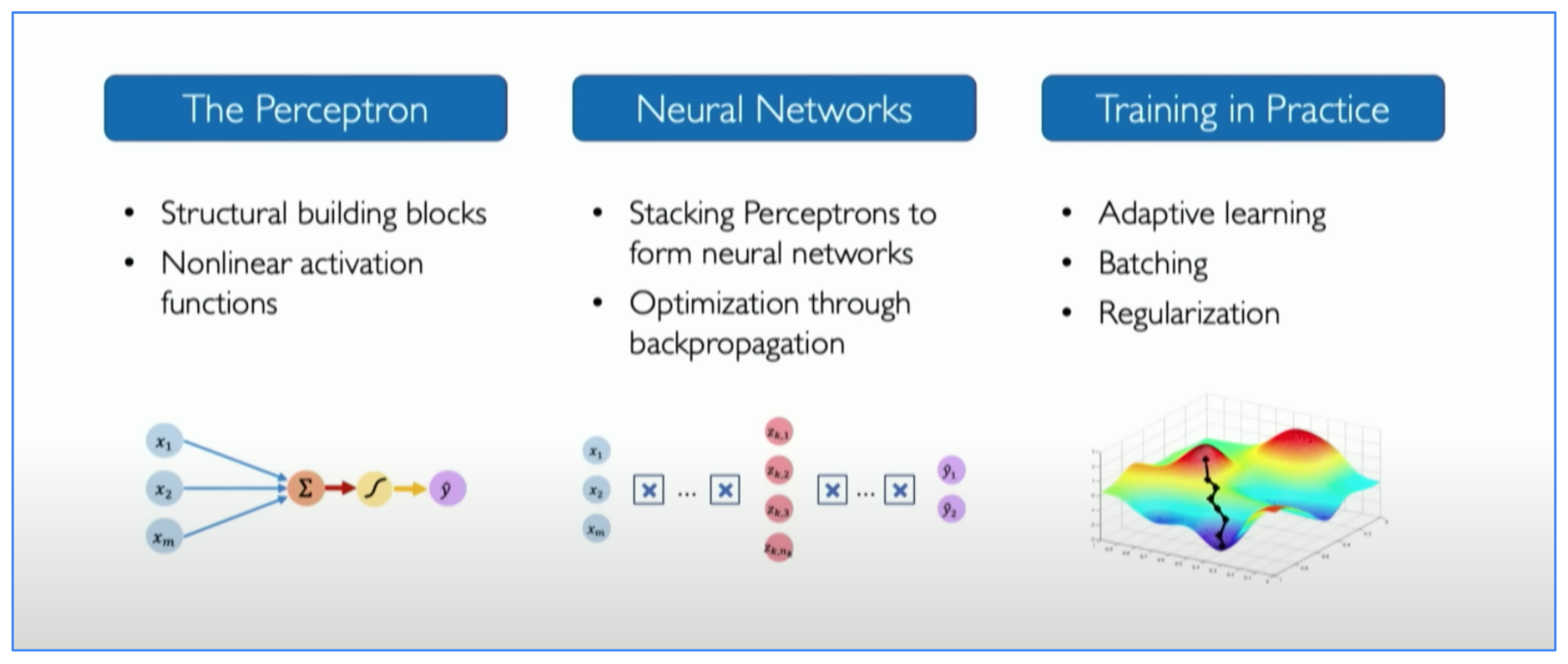

(1) The Perceptron, (2) Deep architecture, (3) Neural Networks, and (4) Training

Source: MIT Deep Learning Course

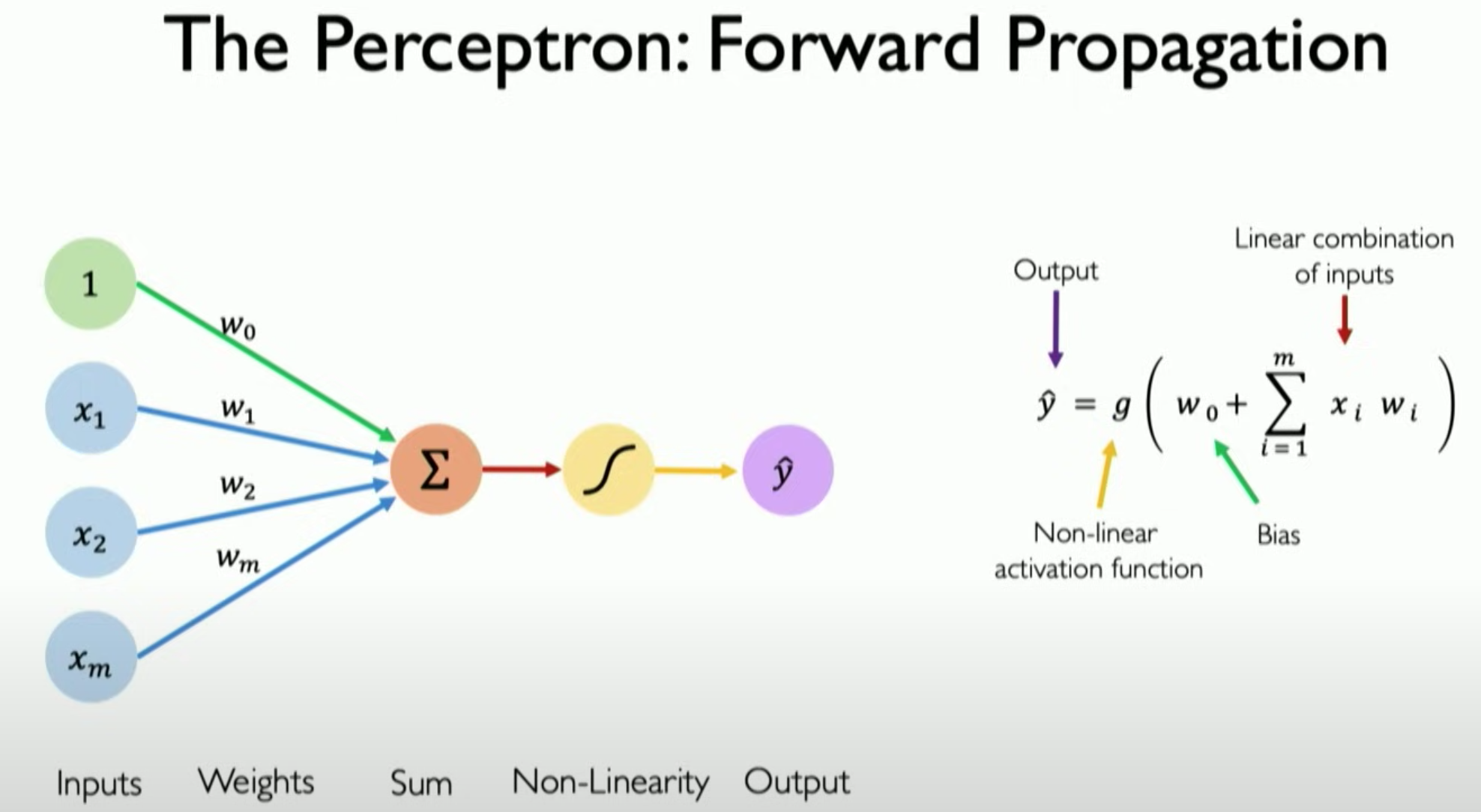

- The Perceptron (Neuron)

A perceptron is the structural building block of a deep learning model. It refers to a simple type of artificial neuron or node in a neural network. It operates by calculating a weighted sum of its inputs, adding a bias term, and then applying an activation function to this sum.

Source: MIT Deep Learning Course
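The computation described above (a weighted sum of the inputs, plus a bias, passed through an activation function) can be sketched in a few lines of Python. This is a minimal illustration rather than code from the MIT course: the sigmoid activation and the input, weight, and bias values are assumptions chosen for the example.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def perceptron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Weighted sum of the inputs, plus a bias, passed through an activation."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return sigmoid(weighted_sum + bias)


# Illustrative values only: three inputs with hand-picked weights and bias.
output = perceptron(inputs=[1.0, 2.0, 3.0], weights=[0.5, -0.25, 0.1], bias=0.2)
print(round(output, 4))
```

Swapping `sigmoid` for another activation (for example ReLU or tanh) changes the shape of the output but not the overall structure of the node.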

- Deep architecture

The term “deep” refers to the depth of the network, which means it has more than one hidden layer. Deep architectures enable DNNs to learn and represent intricate features from data.

- Neural Networks

Deep learning is built upon the concept of artificial neural networks (ANNs), which comprise interconnected nodes, called neurons or units, organized into layers: an input layer, hidden layers, and an output layer.
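As a rough sketch of how data flows through these layers, the snippet below passes an input through one hidden layer and one output layer. The layer sizes, the hand-picked weights and biases, and the choice of ReLU and sigmoid activations are all assumptions made for illustration; in practice the parameters are learned during training.

```python
import math


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: every node sees every value from the previous layer."""
    return [
        activation(sum(x * w for x, w in zip(inputs, node_weights)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical, hand-picked parameters: 2 inputs -> 3 hidden nodes -> 1 output.
hidden_weights = [[0.5, -0.5], [1.0, 1.0], [-1.0, 0.5]]
hidden_biases = [0.0, -1.0, 0.5]
output_weights = [[1.0, -1.0, 0.5]]
output_biases = [0.0]

input_layer = [1.0, 2.0]
hidden_layer = dense_layer(input_layer, hidden_weights, hidden_biases, relu)
output_layer = dense_layer(hidden_layer, output_weights, output_biases, sigmoid)

print(hidden_layer, output_layer)
```

Adding more hidden layers is a matter of chaining further `dense_layer` calls, which is what makes an architecture "deep".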

- Training

Deep neural networks are trained using large datasets. The training process comprises five main steps: (a) sampling a mini-batch of data and initializing the weights, (b) forward propagation and loss calculation, (c) backpropagation and optimization, (d) repeating the training loop, and (e) inference.
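The five steps can be traced in a deliberately small example. The sketch below trains a single linear neuron with mini-batch gradient descent, so the backward pass reduces to a one-step application of the chain rule; a real deep network repeats that step layer by layer. The toy dataset, learning rate, batch size, and step count are assumptions chosen for illustration.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

# Toy dataset (an assumption for illustration): points on the line y = 2x + 1.
data = [(x / 10.0, 2.0 * (x / 10.0) + 1.0) for x in range(-10, 11)]

# (a) Initialize the weights; the weight starts at a small random value.
w = random.uniform(-0.1, 0.1)
b = 0.0
learning_rate = 0.1
batch_size = 4


def mean_squared_error(w, b, samples):
    return sum((w * x + b - y) ** 2 for x, y in samples) / len(samples)


initial_loss = mean_squared_error(w, b, data)

# (d) Repeat the training loop for a fixed number of steps.
for step in range(2000):
    # (a) Sample a mini-batch of data.
    batch = random.sample(data, batch_size)

    # (b) Forward propagation and loss calculation.
    predictions = [w * x + b for x, _ in batch]
    errors = [p - y for p, (_, y) in zip(predictions, batch)]
    loss = sum(e ** 2 for e in errors) / batch_size

    # (c) Backpropagation: gradients of the loss with respect to w and b ...
    grad_w = sum(2 * e * x for e, (x, _) in zip(errors, batch)) / batch_size
    grad_b = sum(2 * e for e in errors) / batch_size

    # ... and optimization: one gradient descent update.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_loss = mean_squared_error(w, b, data)

# (e) Inference: apply the trained parameters to an unseen input.
prediction = w * 3.0 + b
print(initial_loss, final_loss, prediction)
```

Deep learning frameworks automate steps (b) and (c) for networks with many layers, but the loop keeps this overall shape.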

Deep Learning Models

Deep learning encompasses several key architectures, each designed for specific types of data and tasks. These architectures serve as building blocks for solving a wide range of tasks in artificial intelligence and machine learning. Here are some of the key deep learning architectures:

| S.No. | Deep Learning Models | Key characteristics | Common applications |
|---|---|---|---|
| 1 | Feedforward Neural Networks (FNN) | Also known as multi-layer perceptrons (MLPs); consist of an input layer, one or more hidden layers, and an output layer | Classification and regression tasks |
| 2 | Convolutional Neural Networks (CNN) | Designed for structured grid data, such as images and videos; utilize convolutional layers to automatically learn image features | Image classification, object detection, and image generation |
| 3 | Recurrent Neural Networks (RNN), Long Short-Term Memory (LSTM), Gated Recurrent Unit (GRU) Networks | Suited for sequential data like time series, text, and speech; incorporate recurrent connections to maintain memory of previous inputs | Language modeling, speech recognition, and machine translation |
| 4 | Transformer Networks | Introduced in the context of natural language processing (NLP); utilize self-attention mechanisms to process sequences | Machine translation, text generation (GPT) |
| 5 | Generative Adversarial Networks (GANs) | Comprise a generator and a discriminator network; used for generating new data samples that resemble a given dataset | Image generation, style transfer, and data augmentation |
| 6 | Autoencoders | Designed for unsupervised learning and dimensionality reduction; consist of an encoder and decoder to reconstruct input data | Data denoising, anomaly detection, and feature learning |
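To give a feel for the self-attention mechanism mentioned for Transformer networks, here is a simplified sketch of scaled dot-product self-attention. It is an illustration under stated assumptions, not a full Transformer layer: the queries, keys, and values are taken to be the input vectors themselves, whereas real Transformers first apply learned linear projections, and the three-token input is made up.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(sequence):
    """Scaled dot-product self-attention with queries = keys = values = inputs.

    Simplification: the learned query/key/value projections used in real
    Transformers are omitted to keep the sketch short.
    """
    dim = len(sequence[0])
    outputs, all_weights = [], []
    for query in sequence:
        # Similarity of this position to every position in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in sequence
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors.
        output = [
            sum(w * value[i] for w, value in zip(weights, sequence))
            for i in range(dim)
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 3-token sequence with 2-dimensional embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended, attention_weights = self_attention(tokens)
print(attention_weights[0])
```

Each row of `attention_weights` shows how strongly one position attends to every other position, which is how the model relates tokens regardless of their distance in the sequence.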

Applications of Deep Learning

| S.No. | Industry | Deep learning used for | Example companies |
|---|---|---|---|
| 1 | Healthcare | Medical image analysis, disease diagnosis, drug discovery, and personalized medicine | Butterfly Network for ultrasound devices, Arterys for heart image analysis, DeepMind by Google for medical diagnosis, Medtronic for endoscopy devices, Sanofi for disease diagnosis |
| 2 | E-commerce | Personalized product recommendations, customer segmentation, and fraud detection | Amazon, eBay |
| 3 | Finance | Financial fraud detection, credit scoring, and algorithmic trading | American Express and Mastercard for fraud detection, Signifyd for anomaly detection, Riskified for consumer finance, JP Morgan |
| 4 | Automotive | Object detection, lane detection, and path planning in self-driving cars | Tesla, Rivian, and Waymo for self-driving capabilities |
| 5 | Natural Language Processing (NLP) | Sentiment analysis, language translation, chatbots, and text analysis | ChatGPT (a chatbot by OpenAI), Google Translate, Microsoft Bing Search, Google Search, Apple News, Meta and X for sentiment analysis, Grammarly |
| 6 | Entertainment | Content recommendation systems and video analysis | Netflix, Spotify, YouTube, Amazon Prime |
| 7 | Manufacturing | Quality control, predictive maintenance, and supply chain optimization | Toyota in logistics for material handling, ZF Group for quality control, Tesla in supply chain |
| 8 | Marketing | Customer segmentation, campaign optimization, and social media analysis | Mailchimp for campaigns, IBM Watson for customer segmentation and advertisements, GumGum for ads |
| 9 | Customer Relationship Management | Customer purchase trends, buying patterns, and predicting customer support needs | Salesforce Einstein for customer insights, Zoho Zia for business insights |
| 10 | Virtual Assistants | Speech recognition, speech-to-text, and transcription services | Apple Siri, Amazon Alexa, Google Assistant |

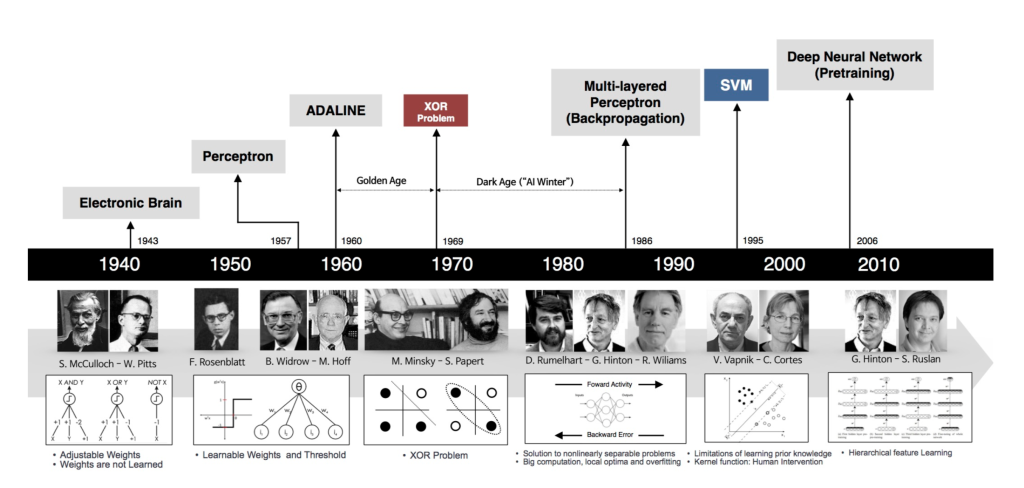

Evolution of Deep Learning: A brief history and Resurgence

A brief history:

Deep Learning might appear to be a novel discovery in the field of machine learning, given its recent name and fame. However, the history of Deep Learning spans several decades, dating back to the 1940s, as presented below:

History of Deep Learning

Source: Deep Learning blog by Beamlab

1950s –> Alan Turing, a British mathematician, first presented the idea that computers would achieve human-level intelligence

1957 –> Frank Rosenblatt, an American psychologist, introduced the perceptron, a single-layer neural network

1965 –> Alexey Ivakhnenko, a Soviet mathematician, created a small functional neural network

1970s –> Limited progress, referred to as the AI winter

1980s –> Backpropagation, a method for training neural networks, was rediscovered and popularized by David Rumelhart, Geoffrey Hinton (a British-Canadian psychologist and computer scientist), and Ronald Williams

1989 –> Yann LeCun demonstrated a neural network that could read handwritten digits

1990s –> Multi-layer perceptrons, the inception of CNNs, and LSTM

1999 –> GPUs (Graphics Processing Units) were developed

2000s –> Limited progress in the field of Deep Learning

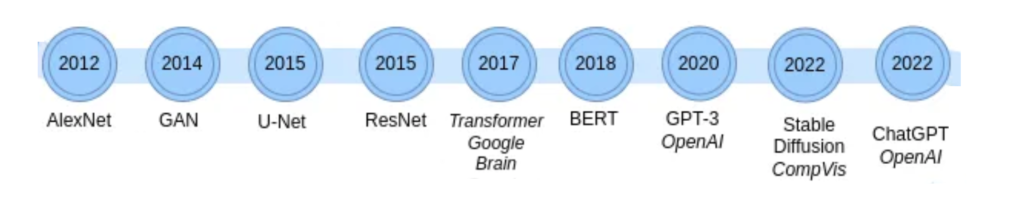

2012 –> AlexNet, a deep convolutional neural network, outperformed other methods for image recognition and led to the resurgence of neural networks. Several notable neural network models and frameworks followed

2017 –> Introduction of Transformer architecture, a game-changer in the field of Deep Learning models for solving Natural Language Processing tasks

2018 onwards –> Revolution in the AI space took place with the introduction of BERT, GPT-3, Stable Diffusion models, and systems such as ChatGPT, Bard, Perplexity etc.

Resurgence of Neural Networks:

The resurgence was catalyzed by three key factors:

- Big data

The digital age brought about an unprecedented amount of data. Deep learning models thrive on vast datasets, and having access to such data allowed for more effective training of deep neural networks.

- Hardware (GPU)

Neural networks are commonly trained on massive datasets and often comprise millions to billions of parameters. Graphics Processing Units (GPUs) have been instrumental in making this computation feasible by offering accelerated processing power and parallel computing capabilities. Unlike Central Processing Units (CPUs), which have a limited number of cores capable of handling a few software threads at a time, GPUs consist of hundreds to thousands of cores capable of simultaneously managing thousands of threads. The increased availability of high-performance GPUs at affordable prices has played a pivotal role in the popularity and success of deep learning.

- Software

- Breakthroughs in Deep Learning architectures, such as Transformers for language modeling and CNNs for computer vision, made it possible to handle the complexity of deep neural networks and train them effectively

- The development of Deep Learning frameworks such as PyTorch and TensorFlow made it easier for developers to work with deep networks


Video Explanation (Playlist):

This playlist contains the following videos in the recommended order:

- ‘MIT Introduction to Deep Learning’ is an introductory lecture from MIT’s Deep Learning course, taught by Alexander Amini and Ava Amini. It explores the meaning of deep learning, its significance, applications, and the fundamentals of neural network training and regularization. This video provides a well-rounded understanding of deep learning and neural networks.

- The video ‘But what is a neural network?’ by 3Blue1Brown explains the concept of deep learning using an image recognition example. The video helps you understand how a deep learning model learns for real-world applications.