The F1 score is the harmonic mean of precision and recall.

One of the problems in binary classification is that, for a given model, precision and recall generally cannot both be improved at once: raising one usually lowers the other. This is the general tradeoff between false positives and false negatives, or Type I and Type II errors. For example, maximizing recall requires minimizing the number of false negatives, but lowering the decision threshold to achieve this typically produces more false positives and therefore a lower precision score.
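The tradeoff can be seen by sweeping a decision threshold over a handful of classifier scores. The scores, labels, and the helper name `precision_recall_at` below are hypothetical and chosen only for illustration; the sketch assumes that items scoring at or above the threshold are predicted positive.

```python
def precision_recall_at(threshold, scores, labels):
    """Precision and recall when every score >= threshold is predicted positive."""
    tp = fp = fn = 0
    for score, label in zip(scores, labels):
        predicted = score >= threshold
        if predicted and label:
            tp += 1  # correctly flagged positive
        elif predicted and not label:
            fp += 1  # Type I error
        elif not predicted and label:
            fn += 1  # Type II error
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical scores and true labels (1 = positive), for illustration only.
scores = [0.95, 0.85, 0.75, 0.65, 0.55, 0.45, 0.35, 0.25]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

for t in (0.8, 0.5, 0.3):
    p, r = precision_recall_at(t, scores, labels)
    print(f"threshold={t}: precision={p:.2f}, recall={r:.2f}")
```

With these made-up scores, lowering the threshold from 0.8 to 0.3 raises recall from 0.50 to 1.00 while precision falls from 1.00 to 0.57.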

The F1 score combines precision and recall into a single number as a weighted harmonic mean of the two. If each is given equal weight, the formula reduces to:

F1 = 2 * (precision * recall) / (precision + recall)
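A minimal sketch of this formula in Python follows. The function name `f1_score` is just an illustrative choice here (it is not meant to mirror any particular library's API), and returning 0 when both inputs are 0 is an assumed convention to avoid dividing by zero.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall with equal weighting."""
    if precision + recall == 0:
        return 0.0  # assumed convention: F1 is 0 when both inputs are 0
    return 2 * precision * recall / (precision + recall)

# The harmonic mean is pulled toward the smaller value,
# unlike the arithmetic mean of the same two numbers.
print(f1_score(1.0, 0.1))  # ≈ 0.182
print((1.0 + 0.1) / 2)     # 0.55
```

Because of this pull toward the smaller value, a classifier cannot earn a high F1 score by excelling at only one of the two metrics.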

If precision or recall is considered more important for a specific classification task, the two can be weighted accordingly.
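A common way to apply unequal weights is the F-beta score, F_beta = (1 + beta^2) * precision * recall / (beta^2 * precision + recall), where beta > 1 weights recall more heavily, beta < 1 weights precision more heavily, and beta = 1 gives back F1. The sketch below assumes this standard definition; the function name `f_beta` and the sample precision and recall values are illustrative only.

```python
def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall; beta > 1 favors recall."""
    b2 = beta ** 2
    denominator = b2 * precision + recall
    if denominator == 0:
        return 0.0  # both precision and recall are 0
    return (1 + b2) * precision * recall / denominator

p, r = 0.5, 0.9  # illustrative values
print(round(f_beta(p, r, beta=1.0), 3))  # 0.643, plain F1
print(round(f_beta(p, r, beta=2.0), 3))  # 0.776, pulled toward recall
print(round(f_beta(p, r, beta=0.5), 3))  # 0.549, pulled toward precision
```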

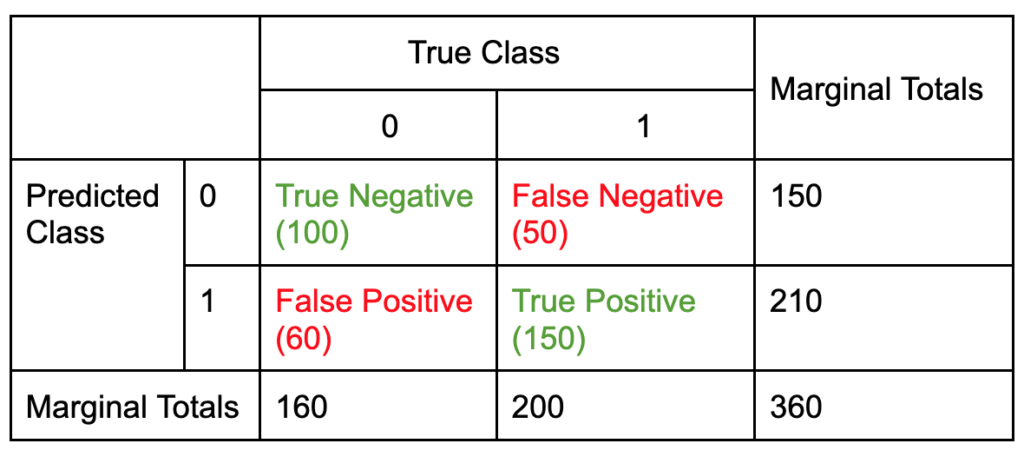

Using an example with 150 true positives, 60 false positives, and 50 false negatives:

Precision = True Positives / (True Positives + False Positives)

Recall = True Positives / (True Positives + False Negatives)

F1 Score = 2 * (precision * recall) / (precision + recall)

Precision = 150/(150 + 60) = 0.714

Recall = 150/(150 + 50) = 0.75

F1 Score = 2 * (0.714 * 0.75) / (0.714 + 0.75) = 0.732
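The arithmetic can be checked directly from the confusion-matrix counts (TP = 150, FP = 60, FN = 50). The helper name `f1_from_counts` is hypothetical. The sketch also uses the algebraically equivalent form F1 = 2TP / (2TP + FP + FN), which avoids rounding the intermediate precision and recall; the exact value is 30/41, about 0.732.

```python
def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """F1 computed from true positive, false positive, and false negative counts."""
    if tp == 0:
        return 0.0  # assumed convention: no true positives means F1 = 0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

# Counts from the worked example.
tp, fp, fn = 150, 60, 50
print(round(f1_from_counts(tp, fp, fn), 3))        # 0.732
# Equivalent closed form: 2TP / (2TP + FP + FN) = 300 / 410
print(round(2 * tp / (2 * tp + fp + fn), 3))       # 0.732
```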