After confirming that the fitted model meets the assumptions necessary for linear regression, the next step of a regression analysis is usually to evaluate how well the model performs in terms of fit and accuracy. Information criteria can be used for that purpose.

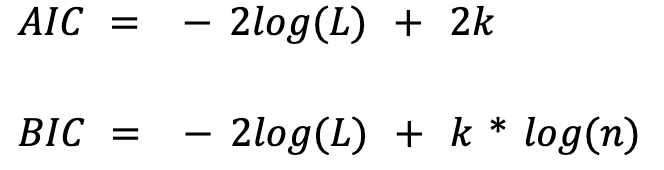

Information criteria are a more modern class of model selection measures. Measures such as AIC and BIC attempt to balance fit and complexity through a calculation based on the penalized log-likelihood. Their values have no meaning on their own; they are useful only for comparing different models fitted to the same data. For information criteria, lower values are always preferred. Both AIC and BIC are based on the log-likelihood (L), so the only difference between their formulas lies in the penalty applied for the number of parameters.
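For reference, the standard forms of the two criteria can be written as follows. The symbols here are assumptions for illustration: L is taken to be the maximized log-likelihood, as in the paragraph above (if L instead denotes the likelihood itself, replace L with ln L), k is the number of estimated parameters, and n is the number of observations.

$$
\mathrm{AIC} = 2k - 2L
\qquad
\mathrm{BIC} = k \ln(n) - 2L
$$

The fit term, -2L, is identical in both. The penalty is 2 per parameter for AIC and ln(n) per parameter for BIC, so BIC penalizes extra parameters more heavily once n exceeds about 7 (since ln(n) > 2 when n > e²).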

AIC: Akaike information criterion

BIC: Bayesian information criterion
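As a minimal sketch of how the two criteria might be computed and compared, the example below fits two linear regressions with NumPy on simulated data and reports AIC and BIC for each. Everything in it is an assumption for illustration: the helper names (`gaussian_log_likelihood`, `aic`, `bic`, `fit_ols`), the simulated data, and the choice to count the error variance as one of the estimated parameters (some software counts only the regression coefficients, which shifts every model's value by the same constant).

```python
import numpy as np


def gaussian_log_likelihood(y, y_hat):
    """Maximized Gaussian log-likelihood, with the error variance set to its MLE (RSS / n)."""
    n = len(y)
    rss = float(np.sum((y - y_hat) ** 2))
    sigma2 = rss / n
    return -0.5 * n * (np.log(2 * np.pi * sigma2) + 1)


def aic(log_lik, k):
    """AIC = 2k - 2 * log-likelihood."""
    return 2 * k - 2 * log_lik


def bic(log_lik, k, n):
    """BIC = k * ln(n) - 2 * log-likelihood."""
    return k * np.log(n) - 2 * log_lik


def fit_ols(X, y):
    """Least-squares fit with an intercept; returns fitted values and parameter count."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    k = X1.shape[1] + 1  # coefficients (incl. intercept) + error variance (assumed convention)
    return X1 @ beta, k


# Simulated data (hypothetical): y depends on x1 only; x2 is an irrelevant predictor.
rng = np.random.default_rng(0)
n = 200
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
y = 1.0 + 2.0 * x1 + rng.normal(scale=0.5, size=n)

candidates = {
    "x1 only": x1[:, None],
    "x1 + x2": np.column_stack([x1, x2]),
}

results = {}
for name, X in candidates.items():
    y_hat, k = fit_ols(X, y)
    ll = gaussian_log_likelihood(y, y_hat)
    results[name] = {"k": k, "log_lik": ll, "aic": aic(ll, k), "bic": bic(ll, k, n)}
    print(f"{name:10s} k={k}  AIC={results[name]['aic']:.2f}  BIC={results[name]['bic']:.2f}")
```

Within each column, the model with the lower value would be preferred. Adding a predictor can never reduce the log-likelihood of a least-squares fit, so the larger model has to improve the fit by more than the added penalty to achieve a lower AIC or BIC.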