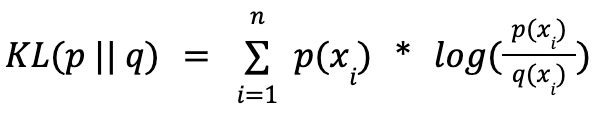

The Kullback-Leibler (KL) Divergence is a way of quantifying how much one probability distribution differs from another. In supervised learning it can serve as a loss function that compares the distribution of true labels with the distribution of predicted labels. In unsupervised learning it appears in algorithms such as Gaussian Mixture Models, which are fitted with the Expectation-Maximization technique. For two discrete distributions p and q defined over the same outcomes x, the KL Divergence is given by

$$
KL(p \parallel q) = \sum_{x} p(x) \log \frac{p(x)}{q(x)}
$$

For continuous distributions with densities p and q, the sum is replaced by an integral over x.
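The formula translates directly into code. This is a minimal sketch that assumes discrete distributions given as Python lists of probabilities over the same outcomes, in the same order. The function name `kl_divergence` and the example distributions are illustrative, and natural logarithms are used, so the result is in nats.

```python
import math


def kl_divergence(p, q):
    """Discrete KL(p || q) in nats for two probability lists over the same outcomes."""
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue  # convention: 0 * log(0 / q) contributes 0
        if qi == 0:
            return math.inf  # p puts mass on an outcome that q rules out
        total += pi * math.log(pi / qi)
    return total


p = [0.5, 0.5]  # example distribution (made up for illustration)
q = [0.9, 0.1]
print(kl_divergence(p, q))  # ≈ 0.5108, i.e. ln(5/3)
```

Terms with p(x) = 0 are skipped by the usual convention, and the result is infinite when q(x) = 0 for some outcome where p(x) > 0.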

The KL Divergence is 0 if and only if the two distributions p and q are identical, and it is positive otherwise, growing larger as the distributions become more different. It is also not symmetric, meaning KL(p || q) is not in general equal to KL(q || p). Because of this asymmetry, and because it also does not satisfy the triangle inequality, it is not a proper distance metric.
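A quick numerical check illustrates both properties. The helper `kl` below is a hypothetical, compact version of the discrete formula that assumes q(x) > 0 wherever p(x) > 0, and the two-outcome distributions are made up for illustration.

```python
import math


def kl(p, q):
    # Discrete KL(p || q) in nats; assumes q > 0 wherever p > 0.
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


p = [0.5, 0.5]
q = [0.9, 0.1]

print(kl(p, p))  # 0.0: identical distributions
print(kl(p, q))  # ≈ 0.5108
print(kl(q, p))  # ≈ 0.3681: swapping the arguments changes the value
```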