Precision measures the proportion of observations the algorithm predicts as positive that are actually positive. In general, precision is calculated as:

Precision = True Positives / (True Positives + False Positives)

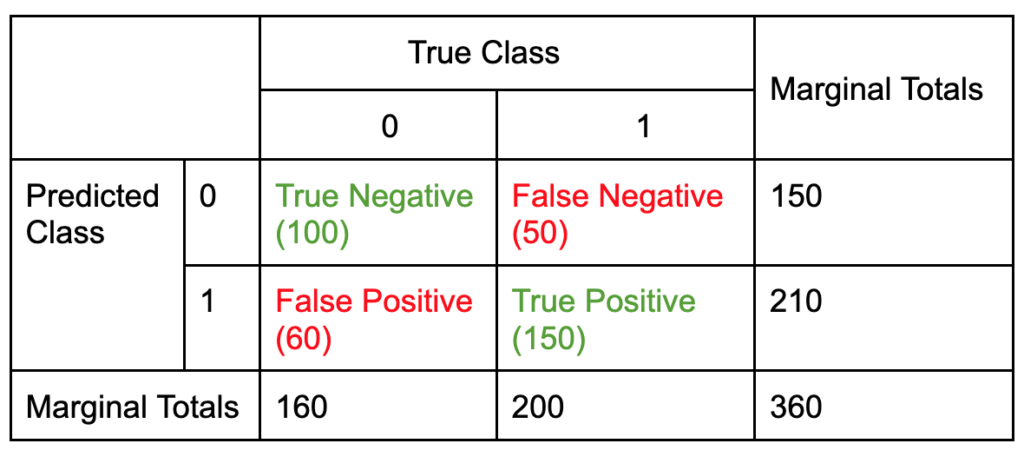

For example, with 150 true positives and 60 false positives:

Precision = 150 / (150 + 60) ≈ 0.714
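
The same calculation can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `precision` and its parameters `true_positives` and `false_positives` are hypothetical, and raising an error when there are no positive predictions is an assumption made here, since the formula is undefined in that case.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Return TP / (TP + FP), the share of positive predictions that are correct."""
    predicted_positives = true_positives + false_positives
    if predicted_positives == 0:
        # Assumption: treat the undefined 0 / 0 case as an error rather than returning 0.
        raise ValueError("precision is undefined when there are no positive predictions")
    return true_positives / predicted_positives


# Counts taken from the worked example above.
example = precision(true_positives=150, false_positives=60)
print(round(example, 3))  # 0.714
```

Some libraries return 0 in the undefined case instead of raising an error; which behavior is appropriate depends on how the metric is used downstream.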