Related Questions:

– What is Gradient Boosting (GBM)?

– What is XGBoost? How does it improve upon standard GBM?

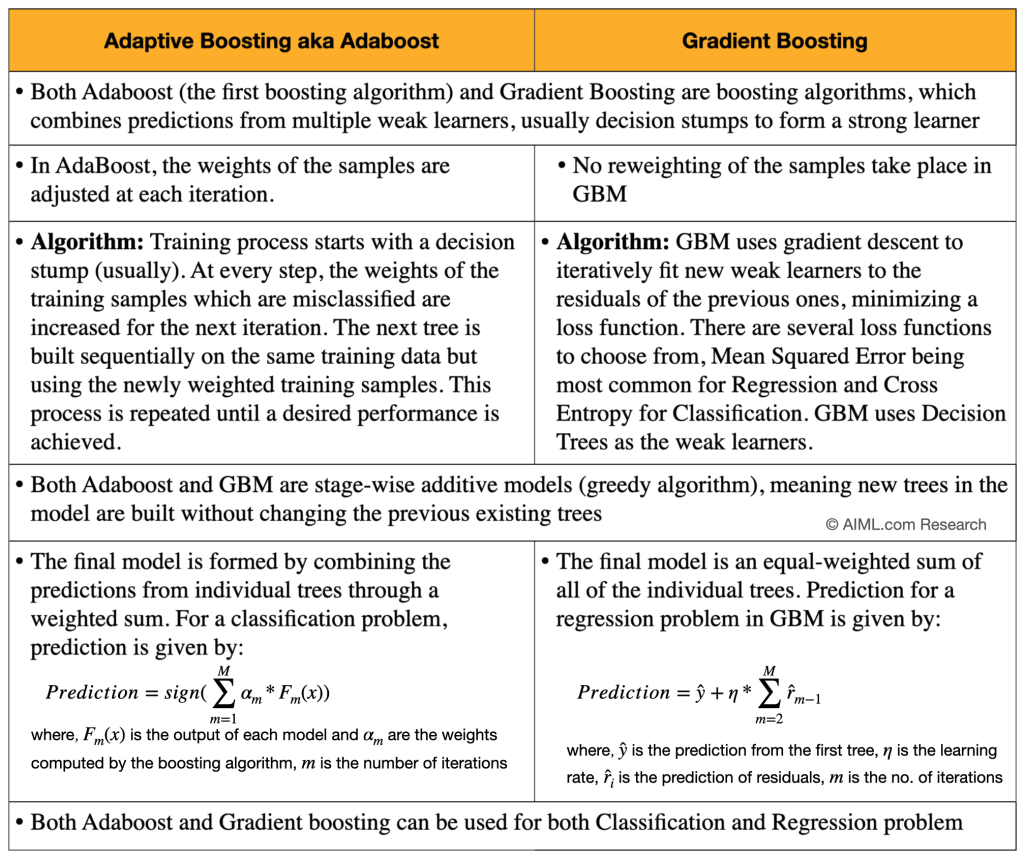

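AdaBoost and Gradient Boosting (GBM) are both ensemble learning techniques that combine multiple weak learners into a stronger model, but they differ in how they build the ensemble and in how each round accounts for earlier mistakes. AdaBoost reweights the training instances after every round so that the next learner focuses on the misclassified examples, whereas GBM fits each new learner to the residual errors (the negative gradient of the loss) of the current ensemble. The key differences between AdaBoost and Gradient Boosting are summarized in the accompanying table.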
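To make the contrast concrete, here is a minimal sketch of both update rules using one-split decision stumps on 1-D data. It is an illustration under simplifying assumptions, not how any library implements these algorithms: the function names (`stump`, `adaboost`, `gradient_boost`, and the two `*_predict` helpers) are hypothetical, the AdaBoost part assumes labels in {-1, +1}, and the gradient boosting part assumes squared loss with a fixed learning rate.

```python
import math

def stump(x, t, left, right):
    """One-split weak learner: `left` if x <= t, otherwise `right`."""
    return left if x <= t else right

def adaboost(xs, ys, rounds):
    """AdaBoost with labels in {-1, +1}: reweight the instances each round."""
    n = len(xs)
    w = [1.0 / n] * n                      # start with uniform instance weights
    ensemble = []                          # entries: (alpha, threshold, left, right)
    for _ in range(rounds):
        # Pick the stump with the lowest *weighted* classification error.
        best = None
        for t in xs:
            for s in (1, -1):
                err = sum(wi for wi, x, y in zip(w, xs, ys)
                          if stump(x, t, s, -s) != y)
                if best is None or err < best[0]:
                    best = (err, t, s)
        err, t, s = best
        err = min(max(err, 1e-10), 1 - 1e-10)     # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)   # lower error, larger vote
        ensemble.append((alpha, t, s, -s))
        # Raise the weights of misclassified points, lower the rest, renormalize.
        w = [wi * math.exp(-alpha * y * stump(x, t, s, -s))
             for wi, x, y in zip(w, xs, ys)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def adaboost_predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(a * stump(x, t, l, r) for a, t, l, r in ensemble)
    return 1 if score >= 0 else -1

def gradient_boost(xs, ys, rounds, lr=0.5):
    """Gradient boosting for squared loss: fit each stump to the residuals."""
    base = sum(ys) / len(ys)               # initial constant prediction
    preds = [base] * len(xs)
    stumps = []                            # entries: (threshold, left, right)
    for _ in range(rounds):
        # For squared loss, the negative gradient is simply the residual.
        res = [y - p for y, p in zip(ys, preds)]
        best = None
        for t in xs:
            left = [r for x, r in zip(xs, res) if x <= t]
            right = [r for x, r in zip(xs, res) if x > t]
            lv = sum(left) / len(left)
            rv = sum(right) / len(right) if right else 0.0
            sse = sum((r - stump(x, t, lv, rv)) ** 2 for x, r in zip(xs, res))
            if best is None or sse < best[0]:
                best = (sse, t, lv, rv)
        _, t, lv, rv = best
        stumps.append((t, lv, rv))
        # Instance weights never change; only the running prediction does.
        preds = [p + lr * stump(x, t, lv, rv) for p, x in zip(preds, xs)]
    return base, lr, stumps

def gradient_boost_predict(model, x):
    """Base prediction plus the shrunken sum of all stump outputs."""
    base, lr, stumps = model
    return base + lr * sum(stump(x, t, l, r) for t, l, r in stumps)

xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]      # no single stump separates these labels

ada = adaboost(xs, ys, rounds=3)
print([adaboost_predict(ada, x) for x in xs])

gbm = gradient_boost(xs, ys, rounds=20)
print([round(gradient_boost_predict(gbm, x), 2) for x in xs])
```

The structural difference shows up in the loop bodies: `adaboost` carries a weight vector `w` from round to round and gives each stump a vote `alpha`, while `gradient_boost` keeps the data unweighted and instead updates the residual targets that the next stump is trained on.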

AdaBoost (Adaptive Boosting) was developed in 1995 by Yoav Freund and Robert Schapire. It was the first boosting algorithm to see widespread success. AdaBoost was originally designed for binary classification; later versions added support for multi-class classification and regression problems.

In recent years, modern boosting algorithms such as GBM and its offshoots (LightGBM, CatBoost, XGBoost) have gained popularity due to their flexibility, robustness, and strong performance. XGBoost in particular has become popular because it outperformed other methods in many Kaggle competitions over the last few years. Please check out the posts on Gradient Boosting and XGBoost for a deeper understanding of those topics.